ExoIntelligence vs Artificial Intelligence

Does AI Have to Be Dangerous?

Boris Karloff as Frankenstein’s Monster

In 1818, Mary Shelley published her startling novel “Frankenstein.” This gruesome tale struck a nerve in society at the time, and that fear has rippled right through to modern times. The idea that an intelligent entity of artificial creation could exist, and that it could (and would) harm people, has frightened millions of people ever since.

In the 1930s and ’40s, many science fiction writers wrote about robots that would turn against their human creators and kill or destroy everything that was around them. They were continuing the Frankenstein concept. However, one author at the time, Isaac Asimov, questioned this approach and postulated the existence of robots that would be helpful to mankind.

This was a very different idea at the time, as it ran completely counter to the views of most people. To support his concepts in this area, Asimov wrote the Three Laws of Robotics, which basically stated that all robots would have certain basic instructions hard-wired into their programming. The first of these instructions, or “laws,” stated that a robot could never harm a human being or, through inaction, allow one to come to harm. As a secondary (and junior) law, robots were also bound to follow the orders given by humans, and a third law had them protect their own existence so long as that did not conflict with the first two.

So, what Asimov did was to head off the potential for robots to do any of the dangerous things that other writers were portraying. These laws also gave humans the ability to override any aberrant activity they observed. This would then make robots (artificial intelligences or AIs) capable of being of tremendous help to mankind, without running the risk of stimulating the “Frankenstein Complex” that was so prevalent at the time.

But the fear of AI has persisted, even so. Just look at the movies that cover the subject of artificial intelligence:

- HAL, the computer in Arthur C. Clarke’s “2001: A Space Odyssey,” went crazy and killed several members of the spaceship’s crew.

- The “Terminator” series of movies depicted a whole computer network that went “intelligent” and took over the world, attempting to kill all human life.

- “The Matrix” told a similar story of programming that became “intelligent” and reduced human bodies to crude batteries powering the AI network.

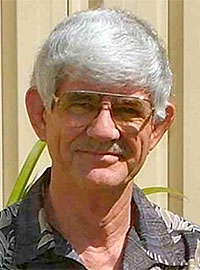

Above: Author Neil Clark introduces science fiction writer Arthur C. Clarke to the IBM personal computer at its launch in Sri Lanka in 1984.

Even Asimov was misrepresented in the movie “I, Robot,” which showed a robot going mad and becoming very dangerous. The original short story that this movie was based upon had a totally different slant: it was used to highlight how Asimov’s Laws of Robotics remained valid in such circumstances and would still protect humanity even when there was aberrant robot behavior. Not so in the movie, which left you with the same devastating conclusion that artificial intelligence was dangerous. Perhaps that sells more movie tickets.

But, here’s the thing! There’s a line you can draw in the sand that clearly separates out the dangerous aspects of AI. Think about it: we are all somewhat afraid of self-driving cars going out of control and crashing, or of commercial airliners with autopilots making fatal decisions, killing hundreds of people.

Artificial intelligence, however, has the power and potential to be tremendously helpful. So, how do we take advantage of this excellent potential without exposing ourselves to the dangerous aspects that have frightened people for centuries?

The answer is volition. It’s simply a matter of whether the artificial entity has the ability to make decisions on its own based on what it sees as the correct actions to take.

As soon as you give an artificial entity the ability to decide what to do and to act on those decisions, you have crossed that line. You have exposed yourself to all the bad aspects of robots and artificially intelligent computers that have been written about in the past.

However, what if you do not cross that line? What if you use all of the clever power and functionality that can be derived from computer programming to assist you in your daily activities, but do not give it any volition to act on its own? What do you end up with?

Well, what you end up with is a highly intelligent entity that understands you and can carry out instructions with a minimum of fuss. In fact, in the extreme, you end up with an intelligent entity that you can interact with in much the same way as you interact with other people. But you do not run the risk of this “artificial entity” running off on its own to kill your family or try to take over the world.

Consider this. You ask a (human) friend: “What’s Bill’s phone number again?” Your friend may say: “Do you mean Bill Bloggs?” When you say yes, he will give you the number, if he knows it.

Try that with your computer today. Well, for a start, you can’t talk to it. And if you type the question on the screen, you’ll get no response. You have to decide which program has the phone numbers in it, then you have to start that program, then you have to search for Bill Bloggs, and finally you have the number.

But what if you had access to an artificially intelligent entity, one that had all the power of the robots and future computer programs that have been envisioned, but without the volition necessary for it to go off on its own tangent? What would you have?

You would have an intelligent entity that would interact with you much as another human would. You could ask it: “What’s Bill’s phone number again?” and it may well say: “Which Bill do you mean? I have several.” And when you confirm that it’s Bill Bloggs, the artificial entity would come straight back with the phone number. The entity would have been smart enough to find the phone number in its record store without your having to tell it how to do that.

It takes enormous power and ingenuity to create an artificially intelligent entity that can do all the things that have been attributed to robots and future computer systems. And if you can harness all that power and ingenuity in such a way that it has NO VOLITION whatsoever (to go off on its own path), you have something that really can help mankind. And it’s not something we need to be afraid of. We don’t even need Asimov’s Laws of Robotics to protect us because, without volition, there is no possibility of the entity producing dangerous results from which we need to protect ourselves.

So, is Artificial Intelligence dangerous? In the forms of self-driving cars and aircraft autopilots, it probably is. No matter how much effort is put into making them “safe,” there will always be a way in which an entity that can act on its own decisions can make deadly mistakes. Volition is the key.

But what if you remove all the capabilities of volition from the entity? What if you retain the incredible power and functionality, combined with language recognition? If you do that, you have a highly valuable and completely safe solution. In fact, you have ExoTech.

ExoTech is not “Artificial Intelligence”; it is “ExoIntelligence.”